Under the Hood

Netcdf Editor Application architecture

The Netcdf Editor Application is composed of two underlying applications:

- Single Page Web App: for editing NetCDF files.

- Multi Page Web App: for preparing boundary conditions for the CM5A2 Earth System Model, used for deep-time climate simulations.

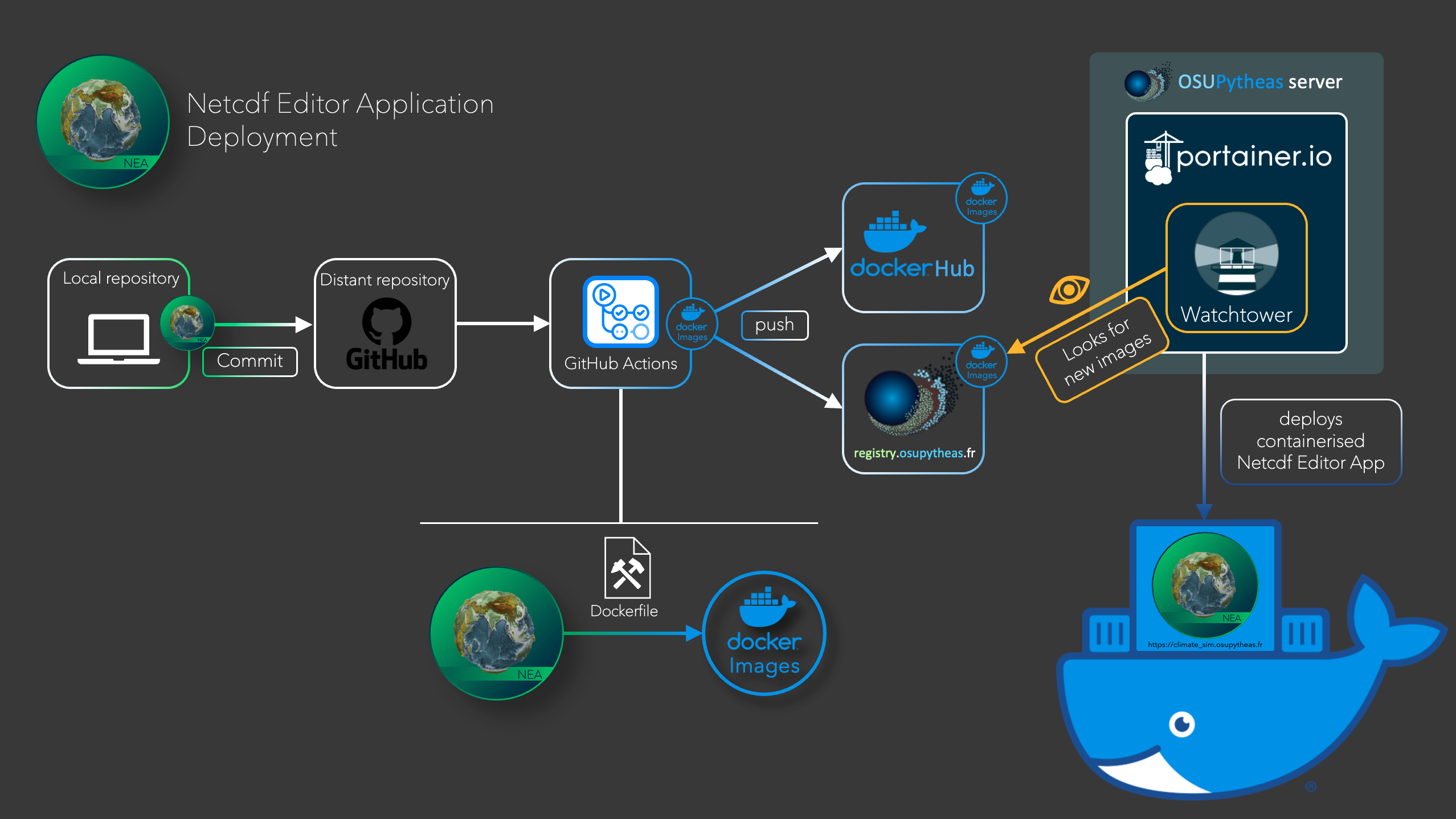

Deployment workflow (CI/CD)

CI/CD stands for Continuous Integration and Continuous Deployment.

Here is a scheme representing the workflow:

The automated workflow is the following:

- When there is a change on the main branch (either by a direct commit or a pull request), GitHub Actions are triggered:

  - Python tests: ensure the code works (check the python-app-flask-test.yml file on GitHub).

  - Build Docker images (check the docker-image.yml file on GitHub). This workflow builds the images and then pushes them to:

    - DockerHub, with the tag latest. This means that anyone can easily install the application (note, however, that the free DockerHub plan limits the number of image pulls, possibly to 200). If you want more information about DockerHub image management, check the DockerHub section in the maintenance documentation.

    - The OSU infrastructure at registry.osupytheas.fr (you need an OSU account to access it), with the tag latest and a tag with the GitHub commit number (previous versions are stored here). This is the preferred place to get the images, as there is no pull limit and it is hosted on local infrastructure. If you want more information about registry image management, check the registry.osupytheas.fr section in the maintenance documentation.

- Watchtower is used on the OSU infrastructure where the app is deployed (referent: Julien Lecubin) to monitor (certain) images every 5 minutes. If a new version is available, the new image is downloaded, and Portainer stops the container using the old image and redeploys a new container from the new image, with the same configuration as the previous one.

Single Page Web App

The Single Page Web App is built with the Panel framework, a dashboarding library for Python. It lets users interact with NetCDF files easily, without worrying about the code needed to load, visualize and modify the data. This application has been reused in the Multi Page Web App (see the Panel section below for more details).

Multi Page Web App

Database

The database is not a service (a container by itself); it is shared between the different containers via a volume. It currently holds 4 tables:

- User: stores usernames and passwords.

- data_files: the base table used for URL endpoints; each upload is given a new id. It stores the uploaded filename as well as some basic metadata, mainly the longitude and latitude coordinates.

- revisions: holds the different revisions and the file types that have been seen. Each time a processing step finishes, a new file is saved. The file has a file type (these can be seen in assets) and a revision number. We store the file path (all files are saved in the instance folder) rather than the NetCDF file itself in binary format; this means files can accidentally be deleted and the database can become out of sync.

- steps: stores the different processing steps and the options that were used to run each task.
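The four tables can be pictured as the following schema. This is a minimal sketch, assuming an SQLite database (a shared database file on a volume suggests it, but this is not stated here); all column names are hypothetical. Because revisions only store file paths, a small consistency check listing revisions whose file has disappeared can help detect the out-of-sync situation described above.

```python
import os
import sqlite3

# Hypothetical schema: table names come from the documentation,
# column names and types are illustrative assumptions.
SCHEMA = """
CREATE TABLE IF NOT EXISTS user (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    username TEXT UNIQUE NOT NULL,
    password TEXT NOT NULL
);
CREATE TABLE IF NOT EXISTS data_files (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    filename TEXT NOT NULL,
    longitude TEXT,
    latitude TEXT
);
CREATE TABLE IF NOT EXISTS revisions (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    data_file_id INTEGER NOT NULL REFERENCES data_files (id),
    filepath TEXT NOT NULL,      -- path inside the instance folder, not the file bytes
    file_type TEXT NOT NULL,
    revision INTEGER NOT NULL
);
CREATE TABLE IF NOT EXISTS steps (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    data_file_id INTEGER NOT NULL REFERENCES data_files (id),
    step TEXT NOT NULL,
    parameters TEXT              -- options used to run the task
);
"""


def missing_revision_files(conn: sqlite3.Connection) -> list[str]:
    """Return the file paths recorded in `revisions` that no longer exist on disk."""
    rows = conn.execute("SELECT filepath FROM revisions ORDER BY id").fetchall()
    return [path for (path,) in rows if not os.path.exists(path)]
```

For example, after `conn.executescript(SCHEMA)` on a connection, calling `missing_revision_files(conn)` returns the paths of revisions whose file has been deleted from the instance folder.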

Flask

Flask is a Python web framework used to create multi-page web apps. It handles routing (the different endpoints) and requests. Being written in Python, it can execute Python code before rendering HTML templates written in the Jinja template language.

The main goal of this container is to display the different webpages, do simple computations used for visualisations and handle requests.

Panel

Panel is the tool used to create the Single Page Web App. It makes it easy to build rich in-page interactivity and processing.

The Single Page has been slightly refactored and then reused notably for:

- Internal Oceans

- Passage Problems

- Sub basins

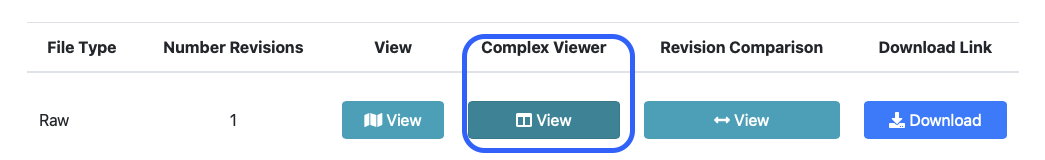

- Complex Viewer (The complex viewer is a port of the Single Page and can be used to quickly and easily modify any file)

The source code for these tools is in Multi_Page_WebApp/python_tools/climpy/interactive.

After saving, the revision count will grow by one and you can download the file.


Nginx

Nginx is a reverse proxy used to dispatch requests between the Flask and Panel containers.

It is also the only entry point (open endpoint) to the app, as the other containers “collaborate” on an internal network.

We have also:

- Increased the timeout to 1 day, to support pages that block during a long process.

- Set the maximum upload size to 200 MB (this can be increased if needed).

RabbitMQ

RabbitMQ is a message broker: we can set up multiple queues that store “tasks” sent from the UI (the Flask app or other) so that consumers can then interpret them.

As the message broker, it is where all the messages are stored and where the consumers connect.

Message Dispatcher

In messaging terms, this is a consumer. We use this service to decouple the UI from the implementation. For example, the interface may send a message such as “regrid this”; the message dispatcher interprets this message and dispatches it to the correct queue. This also means we can change backend calculations seamlessly without touching the frontend: in this example, regridding is carried out in Python, so the dispatcher sends the appropriate message to the Python task queue. This is very important because it allows code to be executed in different environments (Python, Fortran, …).

Another key feature of the message dispatcher is that it receives *.done messages, effectively a processing job saying “I have finished processing this task”. When it receives one, it looks in constants.py to see whether this step invalidates other steps, and sends messages for those steps to the appropriate queues. This is very important because it means we can create processing pipelines and dynamically update files when previous steps are carried out!
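The dispatcher's two roles (routing UI requests to a task queue, and turning *.done messages into follow-up tasks) can be sketched as a pure function. This is a minimal illustration, not the application's code: the step names, queue names and dependency table are hypothetical stand-ins for what constants.py actually contains, and messages carry only a step name, whereas real messages may carry more information.

```python
from dataclasses import dataclass

# Hypothetical routing table: which task queue implements each step.
STEP_QUEUES = {
    "regrid": "python",
    "routing": "python",
    "pft": "python",
    "heatflow": "python",
    "ahmcoeff": "python",
    "internal_oceans": "panel",
    "mosaic": "fortran",
}

# Hypothetical dependency table (the role played by constants.py):
# finishing the key step invalidates the listed steps.
INVALIDATES = {
    "regrid": ["routing", "pft", "heatflow", "ahmcoeff"],
    "routing": ["mosaic"],
}


@dataclass(frozen=True)
class Message:
    queue: str  # task queue the message is published to
    body: str   # step name to run


def dispatch(body: str) -> list[Message]:
    """Translate one incoming message into zero or more task-queue messages."""
    if body.endswith(".done"):
        # A worker finished a step: re-run every step that depends on it.
        finished = body[: -len(".done")]
        return [Message(STEP_QUEUES[step], step) for step in INVALIDATES.get(finished, [])]
    # A request from the UI: forward it to the queue implementing the step.
    return [Message(STEP_QUEUES[body], body)]
```

With these tables, `dispatch("regrid")` yields a single message for the python queue, while `dispatch("regrid.done")` yields four follow-up tasks. Moving a step from Python to Fortran would only change its entry in `STEP_QUEUES`; the UI keeps sending the same step name.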

Python Worker

The Python worker holds a collection of algorithms written in Python. The algorithms can be found in Multi_Page_WebApp/netcd_editor_app/utils and have been decoupled from the interface, meaning they can be executed separately (outside of the app).

Routines include:

- geothermal heat flow calculations following Fred Fluteau’s script

- PFT file creation

- Routing file calculations following a modification of Jean-Baptiste Ladant’s code

- ahmcoeff (used to augment ocean flow)

Workers can easily be scaled with the --scale python_worker=X option of docker-compose up, which creates multiple instances that carry out computations in parallel.
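Scaling works because several workers consume the same task queue, and each task is handed to only one of them (the “competing consumers” pattern RabbitMQ uses for work queues). The sketch below illustrates the idea in a single process, using threads as a stand-in for worker containers and an in-memory queue as a stand-in for RabbitMQ; the task names are hypothetical and the computation is a placeholder.

```python
import queue
import threading


def run_workers(tasks: list[str], n_workers: int) -> dict[str, str]:
    """Process tasks with n_workers competing consumers; return task -> worker name."""
    task_queue: queue.Queue = queue.Queue()
    for task in tasks:
        task_queue.put(task)

    done: dict[str, str] = {}
    lock = threading.Lock()

    def worker(name: str) -> None:
        while True:
            try:
                task = task_queue.get_nowait()  # each task is taken by exactly one worker
            except queue.Empty:
                return  # queue drained: this worker stops
            # Placeholder for the real computation (e.g. a routing calculation).
            with lock:
                done[task] = name

    threads = [
        threading.Thread(target=worker, args=(f"python_worker_{i}",))
        for i in range(n_workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return done
```

Which worker handles which task is not deterministic; what matters is that every task is processed once, whatever the number of workers.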

Messaging

It was decided to use a messaging queue to facilitate (processing) communications between containers.

The basic workflow is:

1. The UI sends a message to carry out a task.

2. The message dispatcher receives the message and sends it to the correct task queue, currently one of:

   - Python

   - Fortran

   - Panel

3. A worker picks up the task and carries out the process.

4. When done, the worker sends a message to the dispatcher saying it has finished.

5. The dispatcher checks whether any other steps depend on this step and, if so, sends messages for them (go to step 2).

6. Processing stops when all steps have finished and the dispatcher does not send out any new messages.
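The loop above can be simulated end to end with a single in-memory FIFO standing in for the broker. This is a minimal sketch under assumptions: the dependency graph is hypothetical, workers complete instantly, and the graph is assumed to be acyclic (a cycle would make the loop run forever).

```python
from collections import deque

# Hypothetical dependency graph: finishing the key step triggers the listed steps.
DEPENDENTS = {
    "regrid": ["routing", "heatflow"],
    "routing": ["ahmcoeff"],
}


def run_pipeline(first_step: str) -> list[str]:
    """Simulate the message loop and return the steps in the order they ran."""
    broker = deque([first_step])  # the UI sends the first message
    executed: list[str] = []
    while broker:  # processing stops once no new messages are sent
        message = broker.popleft()
        if message.endswith(".done"):
            # The dispatcher queues every step depending on the finished one.
            finished = message[: -len(".done")]
            broker.extend(DEPENDENTS.get(finished, []))
        else:
            # The task is routed to a worker, which runs it...
            executed.append(message)
            # ...and reports completion back to the dispatcher.
            broker.append(f"{message}.done")
    return executed
```

Starting from `run_pipeline("regrid")`, the steps run in the order regrid, routing, heatflow, ahmcoeff: rerunning one upstream step automatically refreshes everything downstream of it.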

The advantages of this implementation are:

- Pipeline calculations (see above)

- Steps can be run in parallel

- Steps can be run in different environments: a consumer can be written in any language; we simply need to tell the dispatcher that these messages should be sent to a given queue

GitHub side

GitHub actions

GitHub Actions are automated workflows defined by YAML files located in the repository's .github/workflows directory on GitHub.

docker-images.yml is the workflow that builds the images and pushes them to the Osupytheas registry and DockerHub. python-app-flask-test.yml runs the Python tests, to make sure new changes do not break the application.

Docker side

Docker is used to deploy the application. It is a platform for creating and managing containers: self-contained units that bundle the source code together with the libraries it needs. Thanks to the standardized container format, the application is very convenient to launch and manage.

- The Single Page Web App is deployed in one Panel container.

- As the Multi Page Web App is more complex, it is deployed through several containers, one for each main element of the app:

  - Message_broker

  - Message_dispatcher

  - Nginx

  - Panel

  - Flask

  - Python_worker

  - MOSAIC

  - MOSAIX

As shown in the scheme, a Dockerfile is used to create Docker images of the application. These images are then used to create the containers.

If you want more information on how it works, check the official Docker documentation.

Useful commands

- Relaunching containers from scratch (stop and remove ALL containers, remove images and relaunch the application in dev mode)

Delete all containers and images:

```shell
docker kill $(docker ps -q) && docker rm $(docker ps -qa) && docker rmi $(docker images -q)
```

Use development containers

Getting direct feedback on your code modifications in the locally deployed application can be useful when you want to add features or fix bugs. This way, you only need to refresh the web page to see code changes, instead of having to delete and redeploy the container.

In the application, this is made possible by the specific use of the volumes option for the different containers in docker-compose.dev.yaml. Example for the Flask container:

```yaml
volumes:
  - ./Multi_Page_WebApp:/usr/src/app
```

Here, the container is not a “self-contained closed box”: your host directory on your local machine (./Multi_Page_WebApp) is mounted as a volume in the container (at /usr/src/app), meaning the code inside the container reflects any changes made on your local machine.

Python side

Important paths and files:

- Main Python script: netcdf_editor_app/Multi_Page_WebApp/flask_app/climate_simulation_platform/app.py

- Database operations (save, edit nc file, etc.): netcdf_editor_app/Multi_Page_WebApp/flask_app/climate_simulation_platform/db.py

- Python code related to processing (coefficient computation, routing): netcdf_editor_app/Multi_Page_WebApp/python_tools/climpy/bc/ipsl

- Python code related to data visualization (Panel): netcdf_editor_app/Multi_Page_WebApp/python_tools/climpy/interactive

Data location

There are two important locations for data:

- On the Osupytheas server, /mnt/srvstk1d/docker/data/climatesim is the Docker volume where the data are stored.

- On the Flask container, /usr/src/app/instance is the mount point of the Docker volume.

Therefore, accessing /usr/src/app/instance on the Flask container shows what is inside /mnt/srvstk1d/docker/data/climatesim on the Osupytheas server.
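Since the two directories are the same storage seen from two sides, a path recorded inside the Flask container (for example a revision's file path) can be translated into its location on the server. This is a minimal sketch, assuming the mount described above is the only one involved; the function name and example file name are hypothetical.

```python
from pathlib import PurePosixPath

# Mount described above: server directory (host) <-> Flask container path.
HOST_DIR = PurePosixPath("/mnt/srvstk1d/docker/data/climatesim")
CONTAINER_DIR = PurePosixPath("/usr/src/app/instance")


def container_to_host(path: str) -> str:
    """Translate a path inside the Flask container into the matching server path."""
    # relative_to raises ValueError if the path is outside the mounted volume.
    relative = PurePosixPath(path).relative_to(CONTAINER_DIR)
    return str(HOST_DIR / relative)
```

For example, `container_to_host("/usr/src/app/instance/topo.nc")` gives `/mnt/srvstk1d/docker/data/climatesim/topo.nc`, while a path outside /usr/src/app/instance raises a ValueError because it does not live on the volume.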

After the database was lost, Julien dedicated a new space on the Osupytheas server to data storage: /mnt/srvstk1d/docker/data/climatesim

Wesley updated the Portainer docker-compose file by adding the following at the end of the file:

```yaml
volumes:
  instance_storage:
    driver: local
    driver_opts:
      o: bind
      type: none
      device: /mnt/srvstk1d/docker/data/climatesim
```